Figure 1: Proyecto Synco, Chile 1971–73.

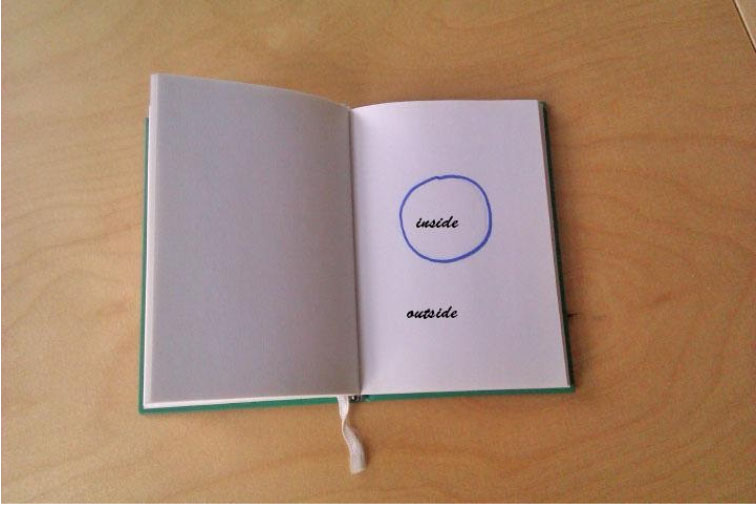

Figure 2: Closed system as an example of an abstractive (neglective) fiction after Hans Vaihinger.

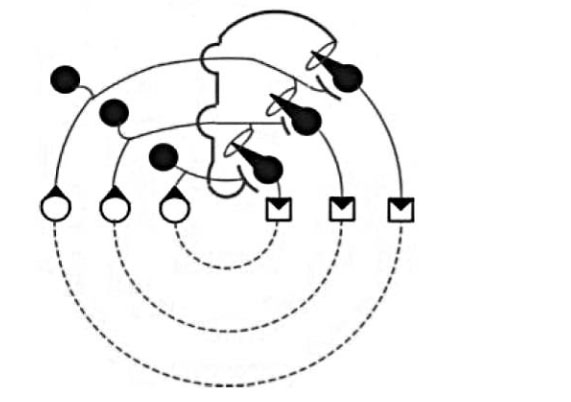

Figure 3: Warren S. McCulloch and Walter Pitts, “A Logical Calculus of the Ideas Immanent in Nervous Activity” (1943).

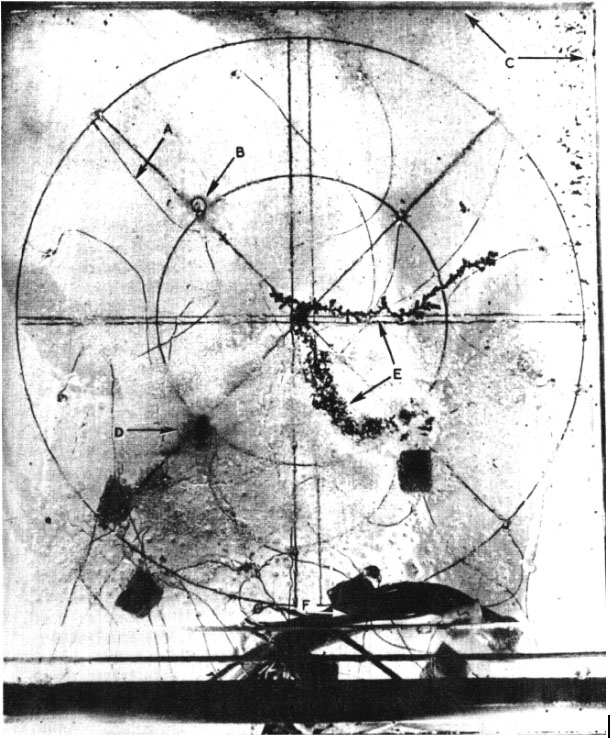

Figure 4: Gordon Pask, photograph of an electrochemical process, from “Physical analogues to the growth of a concept.” (1958)

Abstract of a talk given at the Victoria Summer Camp (Romania)

This article addresses different forms of fiction involved in cybernetic thinking. While cybernetics has long since lost its position as a leading transdisciplinary science, cybernetic thinking, with its terminology and approaches, is still present in various contemporary scientific fields. In addition, the vision of a better future enabled by new technologies is perhaps more than ever the predominant utopia in our society. The most popular utopias of our time (artificial intelligence and the so-called singularity, transhumanism, nanotechnology, and synthetic biology, but also quite small and technologically simple things such as blockchain systems) all show the characteristics of technological utopias. As the early Chilean Project Cybersyn (conducted during the presidency of Salvador Allende in 1971–73, see Figure 1) shows, cybernetics was involved in an interplay between utopia, politics, and technology from the very beginning. The social role of technologies and their political and economic instrumentalization is evidently not so different today. Although there are plenty of examples that could clearly illustrate the role of cybernetic fictions as a medium for social utopias, the focus of this presentation is not on cybernetic fictions such as the Cybersyn project and their role in society. Instead, it concentrates on (1) the fictional imprints that are inevitably part of our scientific and technological thinking, as the philosopher Hans Vaihinger showed at the end of the 19th century, and (2) how an idea by the cyberneticist Gregory Bateson can help us understand that, if we wish to master today’s problems, not only our technological utopias and aims for the future have to change, but also the way we think about technology.

In The Philosophy of As If (1911), Hans Vaihinger¹ argues that as human beings we can never know the reality of the world. As a consequence, we construct systems of thought and then take them for reality. In everyday life, but also in science, we behave “as if” the world matched our fictions. But according to Vaihinger there is nothing wrong with these fictions (semi-fictions or half-fictions, as Vaihinger calls them): “An idea whose theoretical untruth or incorrectness, and therewith its falsity, is admitted, is not for that reason practically valueless and useless; for such an idea, in spite of its theoretical nullity may have great practical importance.”² Since we generally construct our theoretical models on the basis of fictions, it follows immediately that cybernetics must also be based on fictions, and we can thus ask: what are the fundamental fictions of cybernetics? Here it must suffice to name a few. In fact, it turns out that the important fictions on which cybernetics is based are more or less identical with its basic notions. Examples of what Vaihinger would call abstractive (neglective) fictions are open and closed systems, the concept of information, feedback, and black boxes. Figure 2 shows a typical abstracted outline of a closed system, in which any interaction between the inside and the outside of the system is excluded: nothing crosses the border. But we know that closed systems do not exist in reality (except perhaps the universe as a whole). Whether we consider a system open or closed depends on our point of view and on the questions we wish to examine and answer. In this sense, such systems are useful fictions.

Building on its fundamental fictions, cybernetics set out to master human thought. This is a highly reflexive project, pursued in philosophy for some 2,000 years, in which a being attempts, with its restricted means, to understand its own conditions. The difference this time was that the goal was to be achieved technologically: a thinking brain was to be built. Even before the term Artificial Intelligence was coined for the Dartmouth Summer Research Project on Artificial Intelligence (initiated by John McCarthy and held in 1956), cybernetics had already produced fundamental results on this problem. If we assume that the following three points were already known before the cybernetic enterprise began, then only one final building block was missing before one could reach the conclusion that the human brain could easily be built from electrical circuits:

1. The tradition of philosophy, which reduced thought and human intelligence to logical inference (another neglective fiction).
2. The work of George Boole, who as early as 1854 provided a formalism that described logic as mathematical symbol manipulation.
3. The work of Claude Shannon, whose master’s thesis at the Department of Electrical Engineering of the Massachusetts Institute of Technology (MIT), Cambridge, 1937, entitled A Symbolic Analysis of Relay and Switching Circuits, described how logical operations can be realized by electrical circuits.
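The second and third points can be illustrated with a short Python sketch. It is a minimal illustration, not a reconstruction of Shannon’s own notation: it assumes the now-common convention that `True` stands for a closed, conducting contact, and the nested-tuple circuit encoding and the function name `conducts` are hypothetical. Contacts in series conduct only if all of them are closed (Boolean AND); contacts in parallel conduct if any one of them is closed (Boolean OR).

```python
from itertools import product
from typing import Dict, List, Tuple, Union

# Hypothetical encoding: a circuit is either a single relay contact (a name)
# or a ("series" | "parallel", [sub-circuits]) pair.
Circuit = Union[str, Tuple[str, List["Circuit"]]]


def conducts(circuit: Circuit, contacts: Dict[str, bool]) -> bool:
    """Return True if current can flow through the circuit.

    `contacts` maps each contact name to True (closed) or False (open).
    """
    if isinstance(circuit, str):
        return contacts[circuit]
    kind, parts = circuit
    if kind == "series":    # every contact on the path must be closed: AND
        return all(conducts(p, contacts) for p in parts)
    if kind == "parallel":  # a single closed branch is enough: OR
        return any(conducts(p, contacts) for p in parts)
    raise ValueError(f"unknown connection type: {kind!r}")


# Contact x in series with (y in parallel with z) realizes x AND (y OR z).
circuit: Circuit = ("series", ["x", ("parallel", ["y", "z"])])

# Check the circuit against the Boolean expression on every input combination.
for x, y, z in product([False, True], repeat=3):
    assert conducts(circuit, {"x": x, "y": y, "z": z}) == (x and (y or z))
print("circuit matches x AND (y OR z) on all 8 inputs")
```

The point of the exhaustive check is that the wiring diagram and the Boolean formula are interchangeable descriptions: whatever can be written as a Boolean expression of this kind can be built from switches.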

The missing fictional keystone was delivered in 1943 by the cyberneticist Warren McCulloch, together with Walter Pitts.³ According to McCulloch, the synapses in the human brain are no more than switches that work exactly like the formal-logical operations described by Boole (see Figure 3). As soon as we accept the abstractive fictions involved and further assume that the ontology of the world can be formally described without any loss, the rest should not be long in coming. This was, at least, the general conviction when the first wave of AI, now known as good old-fashioned Artificial Intelligence (GOFAI), began. In this way, cybernetics reduced thought to purely mechanical operations that are fully explicable and understandable, with nothing left over as a secret. Today we know that this was just a fiction.
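The kind of unit McCulloch and Pitts proposed can be sketched as follows, in the simplified form usually found in textbooks rather than the full formalism of the 1943 paper: all-or-none (0 or 1) inputs and output, an integer threshold, and absolute inhibition, meaning that any active inhibitory input prevents firing. The function names are hypothetical, and letting a threshold-0 unit fire whenever it is not inhibited is an assumption made here to obtain NOT. With suitable thresholds, a single unit reproduces Boole’s AND, OR, and NOT.

```python
from typing import Sequence


def mp_unit(excitatory: Sequence[int], inhibitory: Sequence[int], threshold: int) -> int:
    """A McCulloch-Pitts style unit with all-or-none output (0 or 1).

    The unit fires if no inhibitory input is active and the number of
    active excitatory inputs reaches the threshold.
    """
    if any(inhibitory):
        return 0  # absolute inhibition: one active inhibitory input blocks firing
    return 1 if sum(excitatory) >= threshold else 0


def and_gate(a: int, b: int) -> int:
    return mp_unit([a, b], [], threshold=2)  # both inputs must be active


def or_gate(a: int, b: int) -> int:
    return mp_unit([a, b], [], threshold=1)  # one active input suffices


def not_gate(a: int) -> int:
    # Assumption: a threshold-0 unit fires unless it is inhibited.
    return mp_unit([], [a], threshold=0)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", and_gate(a, b), "OR:", or_gate(a, b))
print("NOT 0:", not_gate(0), "NOT 1:", not_gate(1))
```

Since AND, OR, and NOT together suffice to express any Boolean function, networks of such units can in principle compute anything a relay circuit of the kind sketched by Shannon can, which is exactly why the conclusion “the brain is a logic circuit” seemed within reach.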

That GOFAI was destined to run into problems, and that making a brain might prove rather more difficult, could have been gleaned from a deeper look at philosophy, but also from the work of another cyberneticist. The English cyberneticist (and anthropologist, social scientist, and semiotician) Gregory Bateson wrote an essay in 1964 titled The Logical Categories of Learning and Communication, which he submitted as a position paper to the “Conference on World Views.”⁴ It begins as follows: “All species of behavioural scientists are concerned with ‘learning’ in one sense or another of that word. Moreover, since ‘learning’ is a communicational phenomenon, all are affected by that cybernetic revolution in thought, which has occurred in the last twenty-five years.”

For Bateson, learning is a central capability of living creatures and is characterized by change of some kind. The delicate matter, obviously, is what kind of change. Change denotes process. But, as Bateson points out, processes are themselves subject to change. Processes may accelerate, slow down, or undergo even deeper types of change, such that at a certain point we must say “this is now a different process.” Starting from this rationale, Bateson develops hierarchically ordered categories of learning, beginning with zero learning (no learning) and extending to a number of levels that is, in principle, open. All learning beyond level zero is to some degree stochastic, i.e., it contains components of trial and error. The next level of learning is reached by generalising the learning process of the current level. In his own words: “Zero learning is characterized by specificity of response, which—right or wrong—is not subject to correction. Learning I is change in specificity of response by correction of errors of choice within a set of alternatives. Learning II is change in the process of Learning I, e.g., a corrective change in the set of alternatives from which choice is made, or it is a change in how the sequence of experience is punctuated.”⁵ And so on. In this terminology, the classical conditioning examined by Ivan Pavlov belongs to Learning I. But present-day machine learning algorithms based on artificial neural networks do not go beyond Learning I either. Most scientists in the field of AI do not even recognize the problem of learning levels, and so far there have been only a few attempts to go beyond level I. Gordon Pask’s experiments with electrochemical processes in the 1950s, in which the system was to learn to resolve the frequency spectrum of the sounds in its environment, can be seen as an early attempt to realize learning beyond level I (see Figure 4). Here, no restrictions were laid down in advance as to what the system had to learn.
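Bateson’s distinction between Learning 0, I, and II can be made concrete with a toy sketch. It is only an illustration under strong simplifying assumptions, not Bateson’s own formalism: responses are strings, a hypothetical `is_right` function stands in for the feedback of the environment, and all function names are invented for this example. Learning I searches a fixed set of alternatives by trial and error; Learning II changes the set itself when no alternative in it is ever right.

```python
import random
from typing import Callable, List, Optional, Sequence, Tuple

Feedback = Callable[[str], bool]  # True if a response is "right" in this context


def learning_zero(response: str, is_right: Feedback, trials: int) -> List[str]:
    """Learning 0: the same response every time; feedback is never used."""
    return [response for _ in range(trials)]


def learning_one(alternatives: Sequence[str], is_right: Feedback,
                 rng: random.Random) -> Optional[str]:
    """Learning I: trial and error within a fixed set of alternatives.

    Tries the alternatives in random order and settles on the first one
    that is rewarded; returns None if no alternative in the set is right.
    """
    candidates = list(alternatives)
    rng.shuffle(candidates)  # the stochastic component: order of trials
    for choice in candidates:
        if is_right(choice):
            return choice    # error corrected: this response is kept
    return None


def learning_two(alternative_sets: Sequence[Sequence[str]], is_right: Feedback,
                 rng: random.Random) -> Optional[Tuple[Sequence[str], str]]:
    """Learning II: change the set of alternatives when Learning I fails."""
    for alternatives in alternative_sets:
        choice = learning_one(alternatives, is_right, rng)
        if choice is not None:
            return alternatives, choice
    return None


def is_right(response: str) -> bool:
    return response == "press lever"


rng = random.Random(0)
print(learning_zero("bark", is_right, 3))            # never corrected
print(learning_one(["bark", "sit"], is_right, rng))  # None: right answer not in set
print(learning_two([["bark", "sit"], ["wait", "press lever"]], is_right, rng))
```

Note that the “Learning II” in this sketch still only chooses among sets supplied in advance by the programmer, which is itself a fixed set of alternatives one level up; the toy example thus also shows how easily machine “learning” falls back into choice within predetermined alternatives.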

A very important notion in Bateson’s learning hierarchy is what he calls repeatable context: “Without the assumption of repeatable context (and the hypothesis that for the organisms which we study the sequence of experience is really somehow punctuated in this manner), it would follow that all ‘learning’ would be of one type: namely, all would be zero learning.”⁶ Clearly, Bateson’s theory is also a fiction, in the sense that we will not find hierarchically organised learning levels in our brain, that is, levels between which we can switch as in Russell’s hierarchy of logical types, to which Bateson explicitly refers. Things such as learning categories are phenomena that emerge when language reflects on itself. If we presume that universals (general terms) are human constructions, then it becomes obvious that the hierarchies of learning and thinking are purely linguistic phenomena. But whether we only consider a given set of decision alternatives or jump out of the box and reflect on how alternatives emerge in the first place makes a big difference to our ability to act in the world. For that reason, Bateson is still known in management and pedagogy today, although he is no longer recognized in informatics. With regard to AI, considering learning levels would be useful because they show very precisely how little progress we have made so far, not only with GOFAI but also with the new deep learning technologies.

What can we learn from the different cybernetic fictions presented thus far, and what conclusions can be drawn for today’s society? Firstly, fiction is involved in any human activity. Our behaviour is based on our imagination and on the models we make up of ourselves and the world we live in. Fiction is therefore also the driving force in our technoscientifically shaped living environment. Secondly, by looking at Bateson’s levels of thinking, even though they are themselves fictions, we can understand that new imaginations, new narratives and, in particular, new notions and concepts are required to invent and implement more elaborate levels of thinking about the use of technology in our society. Technoscience urgently needs concepts that go beyond meaningless progress and innovation, and beyond a simplified technical rendering of the world as a repository of discrete, well-defined objects with unambiguous relations between them: notions that allow us to invent higher-level fictions about ourselves and our future.

NOTES:

1. Hans Vaihinger, The Philosophy of As If: A System of the Theoretical, Practical and Religious Fictions of Mankind (Random Shack, 2015).

2. Ibid., Preface.

3. Warren S. McCulloch and Walter Pitts, “A Logical Calculus of the Ideas Immanent in Nervous Activity,” Bulletin of Mathematical Biophysics 5 (1943): 115–33.

4. Gregory Bateson, “The Logical Categories of Learning and Communication,” in Steps to an Ecology of Mind: Collected Essays in Anthropology, Psychiatry, Evolution, and Epistemology (San Francisco: Chandler Publishing Company, 1972).

5. Ibid., 298.

6. Ibid., 293.